I made myself quite unpopular at conferences by asking about the statistical significance of findings. All scientists want to prove some sweeping new concept or cure a disease, but depending on the scale and sample size, their efforts may not be relevant or useful to the world at large. The vast array of possible SNPs largely accounts for how often pharmaceuticals seem prone to causing severe complications, including death: they are designed for the many and rarely take into account minute aberrations from "normal".

Many researchers came equipped with graphs and charts covering a vast array of data, meant presumably to awe us with the enormity of their conclusions. However, I noticed with alarming frequency an absence of statistics validating the fit of their conclusions (not that such statistics are always a guarantee, depending on the frequency and severity of outliers for which we cannot account; more on that later). I used my time to ask them about statistical relevance in order to determine how useful their science might be to me. After all, if I intend to springboard from their conclusions, I want to make sure the claims that appeal to me stand on solid ground.

For my own research, we considered both biological and technical replicates. I learned that lesson in industry at ARUP Laboratories in Salt Lake City. I would measure at least three different biological samples three times each, for a total of 9 measurements, before plotting the data. This tripartite replication in biological and technical capacity gave me a better sense of the normality of the data and helped isolate aberrations, which were usually due to operator error (me).
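A minimal sketch of that 3 x 3 layout in Python, using only the standard library. The sample names, the readings, and the 10% cutoff are all made up for illustration; the idea is only that each biological sample gets a mean, a standard deviation, and an n, and that a sample whose technical replicates disagree badly gets flagged for a second look.

```python
from statistics import mean, stdev

# Hypothetical measurements: 3 biological samples x 3 technical replicates each.
# The values are invented purely for illustration.
measurements = {
    "plant_A": [10.1, 10.3, 9.9],
    "plant_B": [11.0, 10.8, 11.2],
    "plant_C": [9.5, 9.7, 14.0],  # the last reading looks like an operator slip
}

def summarize(replicates):
    """Return (mean, sample standard deviation, n) for one set of technical replicates."""
    return mean(replicates), stdev(replicates), len(replicates)

def flag_noisy(data, max_cv=0.10):
    """Return names of biological samples whose technical replicates disagree too much.

    CV = standard deviation / mean. The 10% default cutoff is an arbitrary
    assumption, not a standard; pick one that suits your assay.
    """
    flagged = []
    for name, reps in data.items():
        m, sd, _ = summarize(reps)
        if sd / m > max_cv:
            flagged.append(name)
    return flagged

for name, reps in measurements.items():
    m, sd, n = summarize(reps)
    print(f"{name}: mean={m:.2f} sd={sd:.2f} n={n}")

print("check these again:", flag_noisy(measurements))
```

A flag here does not prove operator error; it only says which measurements deserve to be repeated before anything gets plotted.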

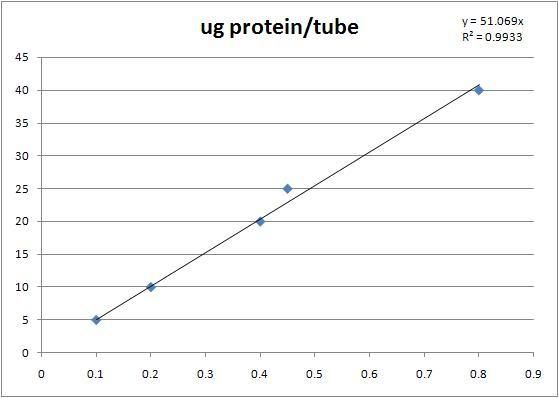

After that, I performed ANOVA, χ², and other tests. Please note that the R² value in the graph from the last entry is 99%. You need not do that much. I was willing to accept a simple set of standard deviation bars and an n representing the sample size.
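For anyone who has not run one, a one-way ANOVA boils down to comparing the spread between groups with the spread within them. The sketch below computes the F statistic by hand in plain Python; the function name and the three groups of numbers are hypothetical, and it is not the exact analysis I ran. Turning F into a p-value needs the F distribution, which the standard library does not provide, so look it up in an F table or use a statistics package (SciPy's `scipy.stats.f_oneway` is one option).

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    `groups` is a list of lists of numbers, one inner list per treatment.
    Assumes at least two groups and some variation within the groups;
    otherwise the division below fails.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean([x for g in groups for x in g])

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual values around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical readings for three treatments, three samples each.
treatments = [
    [1.0, 2.0, 3.0],
    [2.0, 3.0, 4.0],
    [5.0, 6.0, 7.0],
]

f_stat, df_b, df_w = one_way_anova_f(treatments)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A large F says the group means differ by more than the scatter inside each group would explain. With a sample size of one per group there is no within-group scatter to measure, and the test cannot be run at all.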

If you test one plant, tell me you were able to raise its resveratrol levels under enhanced CO₂ concentrations to 50x the normal level, and then ask me to believe that will be true for every individual grape of every cultivar of every species in the genus, I won't buy it. Congress might, or maybe ASEV, and they may give you money, but it won't be useful to anyone if it was a fluke. Utility is, after all, what we seek.

Anything worth doing at all is worth doing well. Plus, it would prevent FDA warnings, Pfizer settlements, GSK recalls, ad infinitum, if a few scientists took the time to test a few more samples, especially if their sample size was one. Come on, people.
